If someone had asked you in winter 2019 your views on gain-of-function research, you would likely have given them a blank look. But since the Covid pandemic, and with the Wall Street Journal revealing in February that the US Department of Energy now thinks Covid-19 is likely to have come from the Wuhan Institute of Virology, gain-of-function research — often conducted to make viruses more infectious and more deadly — is a matter of enormous significance and should be at the forefront of a national conversation about the very real risks it poses.

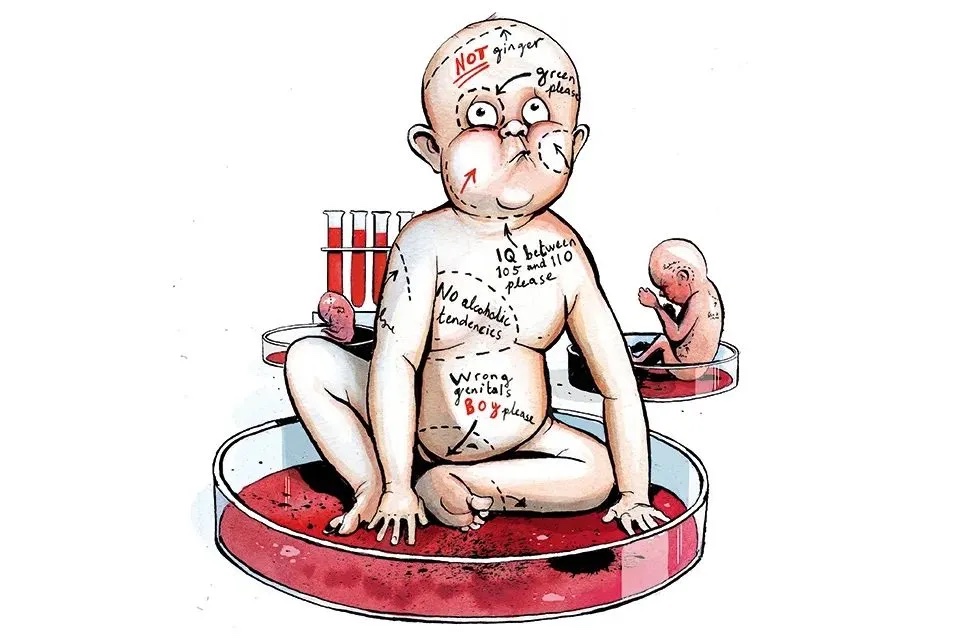

For more than a century virologists have worked to identify and understand viruses, whether or not they’re pathogens, for reasons ranging from pure science to applications in everything from agriculture to vaccines. “Gain-of-function” research takes things further and involves creating “changes resulting in the enhancement or acquisition of new biological functions or phenotypes.” The worry about a grisly “science gone wrong” scenario that could result from messing around with pathogens has existed even longer — indeed, it doesn’t take a wild imagination to see that a lab disaster could cause a real-world catastrophe.

And in 2019, along came Covid-19, killing around 7 million people globally — a catastrophe, to be sure, but with a fatality rate of under 1 percent, far from the worst-case scenario. For reference, think about H5N1, or avian influenza, whose human case-fatality rate stands near a terrifying 60 percent. While H5N1 is very rarely transmitted between humans, the nightmare situation is plenty terrifying: What if a group of enterprising scientists succeeded when they tried to find out if they could make H5N1 transmissible through the air? The resulting pandemic might very well wipe out most of humanity within a few short (very nasty, very brutish) months.

The disturbing reality is that scientists have already more or less done that. In 2012, not one but two separate groups of scientists, led by Ron Fouchier in the Netherlands and Yoshihiro Kawaoka in Japan, infected ferrets with H5N1 — mustelids are not only more closely related to humans than birds, they’re famously vulnerable to influenza. The virus adapted to the mammals in a way that indicated it could infect humans through droplets. These experiments were so “successful” that the US National Science Advisory Board for Biosafety, or NSABB, an independent committee that advises the Department of Health and Human Services, delayed publication of the results. Publication of the papers, the NSABB noted, “could potentially enable replication of experiments that had enhanced transmissibility of H5N1 influenza (in ferrets) by those who might wish to do harm.”

It took little more than two months for NSABB to be overruled by then-NIH chief Francis Collins and Anthony Fauci, then head of the National Institute of Allergy and Infectious Diseases. From that moment, the gain-of-function gloves were off. Since the publication of those two papers in spring 2012, gain-of-function research has not only become orders of magnitude more sophisticated, it has also become much more widespread, and more dangerous.

For its proponents, gain-of-function research gives scientists a virological crystal ball, which helps them understand which viruses could pose a major risk to human populations. With this research in hand, proponents argue, governments can create surveillance programs geared to these specific pathogens, as well as dedicate more resources to vaccine research to defend against them.

But opponents of gain-of-function research argue that this approach has never been vindicated by real-world results. The Covid-19 pandemic is a tragic proof point: while the US runs a billion-dollar virus surveillance effort, it was local Chinese doctors who identified the virus and raised the alarm. And prior experimentation on coronaviruses, these advocates argue, provided no benefit to the creation of the Covid-19 vaccines. Instead, these experiments just create more risk.

“Gain-of-function and synthetic biology are increasingly risky because the technology has advanced in quantum leaps in the past decade especially; the cost has become much lower and the range of actors who could utilize published, open-access methodology for performing such research is limitless,” says Professor Raina MacIntyre, an epidemiologist who serves as principal research fellow at Australia’s National Health and Medical Research Council. Along with other notable figures in the field, MacIntyre is part of Biosafety Now, an organization advocating for greater oversight and regulation of gain-of-function and synthetic biological research.

There’s good reason for such alarm. Risky virus research being done around the world spans the epidemiological spectrum, including at the highest level of biosafety, BSL-4. Such projects include work on highly contagious pathogens like orthopoxviruses and influenza as well as some with astronomical fatality rates: hemorrhagic fevers like Ebola and Marburg, which can kill up to nine in ten of those they infect. Last year, an American researcher testified in a Senate hearing that Chinese researchers were experimenting with Nipah, a bat virus that is one of the world’s most deadly and a CDC-designated bioterror agent.

Now there are dozens of ongoing coronavirus studies. Most at BSL-4 level are focused on SARS-CoV-2, the Covid-19 virus. Last year, there was understandable outrage when it was revealed that researchers at Boston’s NEIDL, one of America’s four biocontainment facilities, had, in the middle of the pandemic, performed gain-of-function research on SARS-CoV-2, combining two strains to make an even more dangerous new artificial one. (Prepandemic research on coronaviruses at the Wuhan Institute of Virology took place at BSL-2, the safety level used to study salmonella.)

At the heart of such research lies an idea that can be difficult to understand: danger is not an incidental byproduct of the research; it is central to it. “The nature of this work is to start with a potential pandemic pathogen and enhance either its ability to transmit or its ability to cause disease,” says Richard Ebright, a molecular biologist at Rutgers who has been a vocal opponent of gain-of-function research. “These are pathogens that are not present in nature, not circulating in nature, not circulating in humans or in livestock, in crops or even in the wild. These are pathogens that might not come to exist in years, decades, centuries or millennia, but which are brought into existence through laboratory manipulation.”

This notion — creating viruses that can cause a pandemic in order to study how they behave — is easy to miss because there is no scientific equivalent, even in weapons research. Ebright, also a member of Biosafety Now, characterizes this laboratory risk as “existential, extinction-level risk.”

And much of it is, of course, funded by taxpayer dollars; even today, there is still almost no congressional oversight into what kind of virological experimentation is being done, or how it’s funded.

Part of the explanation lies in the events of September 2001, when, a week after the terrorist attacks in New York and Washington, letters containing a suspicious white powder were mailed around the country. In early October, Sun photojournalist Bob Stevens was hospitalized in southern Florida with flu-like symptoms. The next day, he was dead of pulmonary anthrax.

Over the next three weeks, letters containing white powder showed up at major broadcast newsrooms and the offices of US senators. Four more people died from anthrax. With Ground Zero still smoldering, the national security apparatus was ablaze with fear of a new kind of warfare that key figures, notably vice president Dick Cheney, had obsessed over for years. October 2001 didn’t merely change America’s biodefense posture: It altered the course of the biological sciences forever.

After 2001, virus research surged: In 1997 the government’s biosecurity budget was $137 million; by 2004 it had grown more than 3,000 percent, with the federal government spending $14.5 billion in the period. The US made a modest first attempt at imposing regulation on infectious-disease research in 1997 when it forbade the transfer of certain kinds of viruses, bacteria and toxins between labs without CDC approval, after an American researcher requested a strain of plague from an NGO that stores microorganisms.

Despite increased biodefense spending, regulation has lagged. As research funding ramped up, the Bush administration was busy reorganizing America’s biodefense structure by putting it into a silo. That silo was the National Institute of Allergy and Infectious Diseases, headed by Anthony Fauci, who, almost overnight, became the most powerful person in America’s science establishment. Far from the kindly pandemic savior he’s been portrayed as by parts of the media, Fauci had long been America’s biodefense chief.

It’s this that explains how, with relative ease, Fauci and his NIH boss Francis Collins — both of whom Ebright calls “ardent” proponents of gain-of-function research — were able to override the NSABB’s decision to halt the publication of the 2012 bird-flu experiments. Fauci called the shots in biodefense, and everyone knew it. This top-down structure defanged regulation, but it also had another even more worrisome effect: it made biodefense oversight nobody’s problem. According to Ebright, the molecular life-sciences silo has been perceived by policymakers as “impenetrable,” both because of its national security implications and its technical nature. “After Cheney and Bush were gone,” says Ebright, “their [biodefense] agenda continued in place. It was tempered at the edges under the Obama administration and ignored during the Trump administration and Biden administration, but it has continued largely as it was set out.”

The pandemic changed that calculus, upsetting the status quo in ways that even loyalists find difficult to resist. In Congress, this has translated into a wave of oversight that the biodefense sector has never before encountered. In 2021, Iowa senator Joni Ernst introduced the Fairness and Accountability in Underwriting Chinese Institutions (FAUCI) Act, which would ban gain-of-function research funding. Jim Comer, now chairman of the House Committee on Oversight and Reform, and Jim Jordan, chairman of the House Judiciary Committee, have used their powers to bring new information to light, including through calls for testimony. And Florida senator Marco Rubio last year called for Harvard to clarify its involvement with Fauci and the Chinese real estate firm Evergrande (in response to my reporting for The Spectator).

What’s clear, however, is that the political impetus remains squarely on one side of the aisle. That is largely to do with an equivalent of “Trump Derangement Syndrome” that we might call the Fauci Effect. “Anthony Fauci had the great privilege in 2020 of sharing a screen with Donald Trump,” says Ebright. “He face-palmed on television once and that made him a Democratic Party icon to those unaware of his actual role and his actual actions.”

While Fauci’s famous face-palm at a March 2020 press conference certainly helped his cause, it’s only part of the story. The other part is money. As biodefense funding has ramped up, a quiet but effective lobbying effort has bloomed around it. The heavyweight in the field is the Bipartisan Commission on Biodefense, or BCB, an organization whose “team” web page lists a who’s-who of DC powerbrokers, including co-chairs Joseph Lieberman and Tom Ridge and commissioners Donna Shalala, Tom Daschle and Fred Upton. Donors include Danish pharmaceutical company Bavarian Nordic, which makes vaccines for viruses like monkeypox and Ebola; the Biotechnology Innovation Organization, a biotech trade association; and Open Philanthropy, the philanthropy of Facebook co-founder Dustin Moskovitz and his wife Cari Tuna.

The group’s influence became fully evident in summer 2021, when the Biden administration announced a $65 billion pandemic-preparedness program that used space exploration as its organizing metaphor: Only months earlier, in January, the BCB released “The Apollo Program for Biodefense,” which advanced the idea that the US must embrace the ethos of the Apollo program to effectively fight pandemics.

While top-down influence in Washington has pushed for more funding, more research and more risk, grassroots activism opposing this advance is spreading. NEIDL, the Boston biocontainment facility where gain-of-function research produced a new SARS-CoV-2 variant during the pandemic, has faced local opposition since its inception, with residents, city council members and experts weighing in. “We are talking about bringing some of the most deadly agents into a very, very highly densely populated area in the inner city,” a Boston city councilor said.

Such concerns have been supported by a number of leaks from NEIDL, including reports on lab mice and a series of lab-related infections. But the weight of the biodefense establishment, and the influence of Boston University, eventually overcame years of lawsuits and activism. More importantly, the creation of biosafety labs has continued apace, with new ones springing up around the country, many run by private research companies subject to much less oversight than big government BSL-4 labs that are impossible to keep out of the public eye.

The reason for the lack of oversight is that biosafety labs come in so many shapes and sizes, from those run by pharmaceutical companies to closed military sites to academic institutions like NEIDL, that creating regulation to cover all of them is a task authorities have been unwilling to confront. But that hasn’t made the need less pressing.

“People in the community have questions [about gain-of-function research] that our profession isn’t providing answers for,” says Bryce Nickels, a researcher at Rutgers’s Waksman Institute of Microbiology and a principal at Biosafety Now. “We have an issue where biolabs are doing research, the public doesn’t know what they’re doing, the scientists who could tell them what they’re doing don’t want to, and it leads to this erosion of trust. And maybe that trust can never be restored again.”

Regardless of new pushback, gain-of-function research isn’t going anywhere but forward. What’s clear is that the public is awakening to the gain-of-function arms race — some military, some private, some scientific. Unlike the nuclear arms race, which requires massive resources, this race can be pursued in tiny spaces, with relatively small budgets. Despite this, the effects of error or unforeseen outcomes will be nothing short of global.

This article was originally published in The Spectator’s April 2023 World edition.