Over the past few weeks, my sense of the surreal has been growing. At a time when a rational reading of the COVID data suggests we should be getting back to normal, we instead see an ever more elaborate set of arbitrary responses. These are invariably described as ‘guided by science’. In fact, they are guided by something rather different: models, bad data and subjective opinion. Some of those claiming to be ‘following the science’ seem not to understand the meaning of the word.

At the outset, we were told the virus was so pernicious that it could, if not confronted, claim millions of lives. The World Health Organization put its fatality rate at 3.4 percent of confirmed cases. Then, from various sources, we heard 0.9 percent, followed by 0.6 percent. It could yet settle closer to 0.1 percent, similar to seasonal flu, once we better understand how many milder cases went undetected and how many deaths the virus actually caused (rather than deaths in which the virus was present). How can this be, you might ask, given the huge death toll? Surely the figure of 44,000 COVID deaths is proof that calamity has struck?

But let us look at the data. Compare this April with last and, yes, you will find an enormous number of ‘excess deaths’. But go to the Office for National Statistics website, look up deaths in the winter/spring seasons for the past 27 years and adjust for population, and this year ranks only eighth. It needs to be put in perspective.

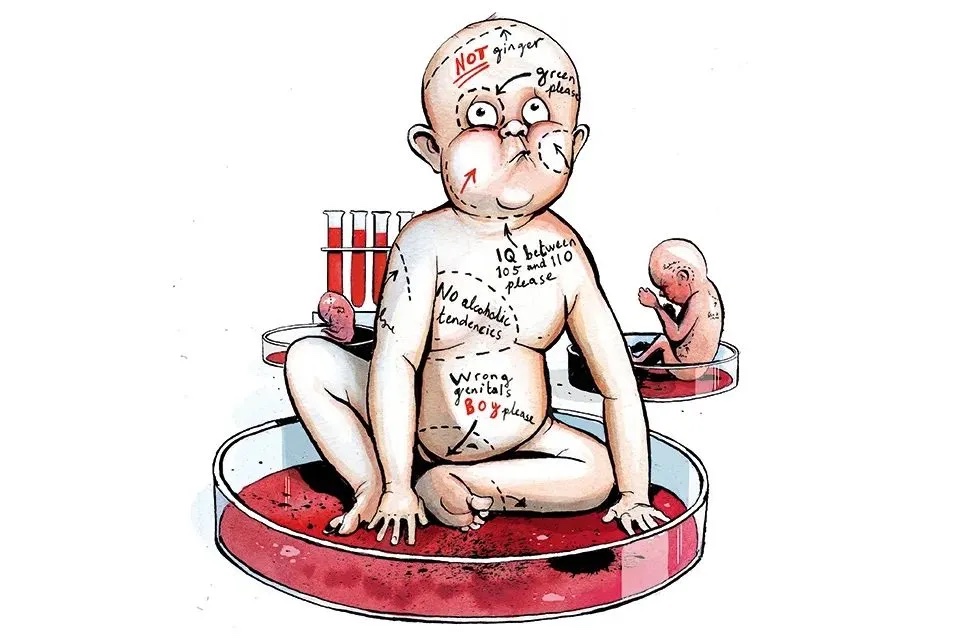
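Adjusting for population means comparing death rates rather than raw counts, since a growing population records more deaths even when individual risk is unchanged. The sketch below shows the calculation and the ranking step on invented figures (not ONS data); the `Season` type and the function names are hypothetical, and this crude per-head rate does not correct for the population's changing age structure.

```python
from dataclasses import dataclass

@dataclass
class Season:
    year: int
    deaths: int       # deaths registered in the winter/spring season
    population: int   # population estimate for that year

def deaths_per_100k(season: Season) -> float:
    """Crude death rate per 100,000 people (no age adjustment)."""
    return season.deaths / season.population * 100_000

def rank_seasons(seasons: list[Season]) -> list[tuple[int, float]]:
    """Return (year, rate) pairs sorted from highest to lowest death rate."""
    rates = [(s.year, deaths_per_100k(s)) for s in seasons]
    return sorted(rates, key=lambda pair: pair[1], reverse=True)

# Invented figures for illustration only; substitute a real ONS series
# to reproduce the comparison described in the text.
seasons = [
    Season(2000, 300_000, 58_000_000),
    Season(2010, 280_000, 62_000_000),
    Season(2020, 310_000, 66_000_000),
]

ranking = rank_seasons(seasons)
position = [year for year, _ in ranking].index(2020) + 1
print(ranking)
print(f"2020 ranks {position} of {len(seasons)} by deaths per head")
```

In this made-up example the 2020 season has the most deaths in absolute terms but only the second-highest rate per head.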

Viruses have been chasing us since before we climbed down from the trees. Our bodies fight them off and learn in the process. We get sick. It’s horrible, sometimes fatal. But viruses recede, and our bodies’ defenses learn and strengthen. The process has been going on for millions of years, which is why more than 40 percent of our genome is made up of viral or virus-like genetic material. The spread of viruses like the one behind COVID-19 is not new. What’s new is our response.

Now we have new tools that let us spot (and name) new viruses. We watch their progress in real time, plotting their journeys across the world, then sharing the scariest stories on social media. In this way, the ordinary progress of a virus can be made to look like a zombie movie. The whole COVID drama has been less a crisis caused by an abnormally lethal new bug than a crisis of awareness of what viruses normally do.

Let’s go back to the projection that COVID could take half a million lives in Britain: a figure produced by modeling. But how does modeling relate to the ‘science’ we have heard so much about? An important and often overlooked point is that modeling is not science, for the simple reason that a prediction made by a scientist (with or without a model) is just opinion.

To count as science, a prediction or theory must be testable and, in principle, falsifiable. Einstein is revered as a great scientist not just for the complexity of general relativity but because his theory let scientists predict things that could then be checked, such as the bending of starlight by the Sun’s gravity. This forward-looking capacity, repeatedly verified, is what makes it a scientific theory.

The ability to look backwards and retrofit a theory to the data via a model is not nearly so impressive. Take, for example, a curve describing the way a virus affects a population in terms of the number of infections (or deaths). We can use models to claim that lockdown had a dramatic effect on the spread, or none at all. We can use them to claim that social distancing was vital, or useless. Imperial College London looked at the data and claimed that lockdown had saved three million lives across 11 European countries. A study from Massachusetts looked at the data and concluded that lockdown ‘might not have saved any life’. Through modeling alone, we can choose pretty much any version of the past we like.
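The following toy example, built on an invented curve of daily deaths and a hypothetical lockdown date, shows why retrofitting cannot settle the question. The observed data pin down the daily growth rates, but what growth ‘would have been’ without the intervention is an assumption the modeler supplies, and two opposite assumptions fit the same past equally well. The function names and every number are illustrative, not taken from any published model.

```python
import math

def growth_rates(series):
    """Day-on-day log growth rates implied by a series of daily counts."""
    return [math.log(b / a) for a, b in zip(series, series[1:])]

def rebuild(start, rates):
    """Rebuild a daily series from a starting value and daily log growth rates."""
    values = [start]
    for r in rates:
        values.append(values[-1] * math.exp(r))
    return values

# Invented epidemic curve: growth slows steadily, then turns into decline.
observed = rebuild(10.0, [0.3 - 0.03 * t for t in range(20)])
rates = growth_rates(observed)
LOCKDOWN_DAY = 5  # hypothetical date of the intervention

# Story A: lockdown caused the slowdown, so without it growth would have
# stayed at the last pre-lockdown rate for the rest of the period.
frozen = rates[:LOCKDOWN_DAY] + [rates[LOCKDOWN_DAY - 1]] * (len(rates) - LOCKDOWN_DAY)
no_lockdown_a = rebuild(observed[0], frozen)

# Story B: the slowdown was under way regardless (immunity, voluntary caution),
# so without lockdown the curve would have looked exactly the same.
no_lockdown_b = rebuild(observed[0], rates)

# Both stories agree with the observed curve, which is what actually happened;
# they differ only in the counterfactual, which can never be observed.
averted_a = sum(no_lockdown_a) - sum(observed)
averted_b = sum(no_lockdown_b) - sum(observed)
print(f"Story A: {round(averted_a)} deaths averted; Story B: {round(averted_b)}")
```

Story A credits the hypothetical lockdown with more than 2,000 averted deaths and Story B with none, yet nothing in the observed series favors one over the other.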

The only way to gauge the real-world accuracy of a model is to use it to predict what will happen and then test those predictions. Which brings us to another problem with the current approach: a willful determination to ignore the quality of the information being used to set COVID policy.
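That test can be sketched as follows: fit a model to the early part of a series, then score its forecasts against data it never saw. The counts below are invented, the simple exponential-growth model is an illustrative choice rather than any model used in practice, and the error measure (mean absolute percentage error) is one of several that could be used.

```python
import math

def fit_exponential(days, counts):
    """Least-squares fit of log(count) = a + b * day; returns (a, b)."""
    logs = [math.log(c) for c in counts]
    n = len(days)
    mean_x = sum(days) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in days)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, logs))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b

def mean_abs_pct_error(predicted, actual):
    """Average of |predicted - actual| / actual, as a percentage."""
    return 100.0 * sum(abs(p - a) / a for p, a in zip(predicted, actual)) / len(actual)

# Invented daily counts: the first 8 days are "the past", the rest arrive later.
counts = [10, 13, 17, 22, 27, 33, 38, 43, 47, 50, 52, 52, 50, 47, 43]
split = 8
train_days = list(range(split))
a, b = fit_exponential(train_days, counts[:split])

# How well the model matches the data it was built from...
in_sample = mean_abs_pct_error([math.exp(a + b * d) for d in train_days], counts[:split])

# ...versus how well it predicts data it had not seen when it was fitted.
future_days = list(range(split, len(counts)))
out_of_sample = mean_abs_pct_error([math.exp(a + b * d) for d in future_days], counts[split:])

print(f"in-sample error: {in_sample:.1f}%, out-of-sample error: {out_of_sample:.1f}%")
```

Here the fit to the past looks good while the forecast misses badly, because growth in the invented series slows after the fitting window; only the second number says anything about predictive value.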

In medical science there is a well-known classification of evidence quality called ‘the hierarchy of evidence’. This seven-level system gives an idea of how much weight can be placed on any given study or recommendation. Near the top, at Level 2, are randomized controlled trials (RCTs), in which patients are randomly assigned either to a new treatment or to a comparison such as a placebo. The results of such studies are pretty reliable, because randomization leaves little room for bias to creep in. A systematic review of several RCTs is the highest, most reliable form of medical evidence: Level 1.

Further down (Levels 5 and 6) comes evidence from much less compelling, descriptive-only studies looking for a pattern, without using controls. This is where we find virtually all evidence pertaining to COVID-19 policy: lockdown, social distancing, face masks, quarantine, R-numbers, second waves, you name it. And — to speed things up — most COVID research was not peer-reviewed.

Right at the bottom of the hierarchy, at Level 7, is the opinion of authorities or reports of expert committees. This is because, among other things, ‘authorities’ often fail to change their minds in the face of new evidence. Committees, which contain a diversity of opinion and are inevitably cautious, often issue compromise recommendations that are not scientifically valid. Ministers talk about ‘following the science’. But the advice of Sage (or any committee of scientists) is the least reliable form of evidence there is.

Such is the quality of the decision-making behind our lockdown narrative: an exaggerated belief in the virus’s lethality, formed early and maintained, reinforced by almost data-free modeling and then amplified by further modeling with no proven predictive value. All of it is summed up in recommendations from a committee, based on qualitative data that hasn’t even been peer-reviewed.

Mistakes were inevitable at the start of this. But we can’t learn without recognizing them.

This article was originally published in The Spectator’s UK magazine.