The Chinese Communist Party faces a conundrum: it wants to lead the world in artificial intelligence and yet it is terrified of anything with a mind of its own. Chinese regulators have reportedly told domestic tech companies not to offer their users ChatGPT, the Microsoft-funded chatbot that can provide seemingly well-researched answers to pretty much any question you can think to ask it. China Daily, a CCP mouthpiece, has admitted that the technology has already gone “viral” in China. The paper said that AI could give “a helping hand to the US government in its spread of disinformation and its manipulation of global narratives for its own geopolitical interests.”

That’s a problem because “spreading disinformation” and manipulating “global narratives” are exactly what the CCP wants its own, Chinese-developed AIs to be able to do. The party needs to be sure that its chatbots are on message – that they are conditioned to spew party propaganda on cue, sidestep politically awkward questions and generally steer clear of anything deemed contentious.

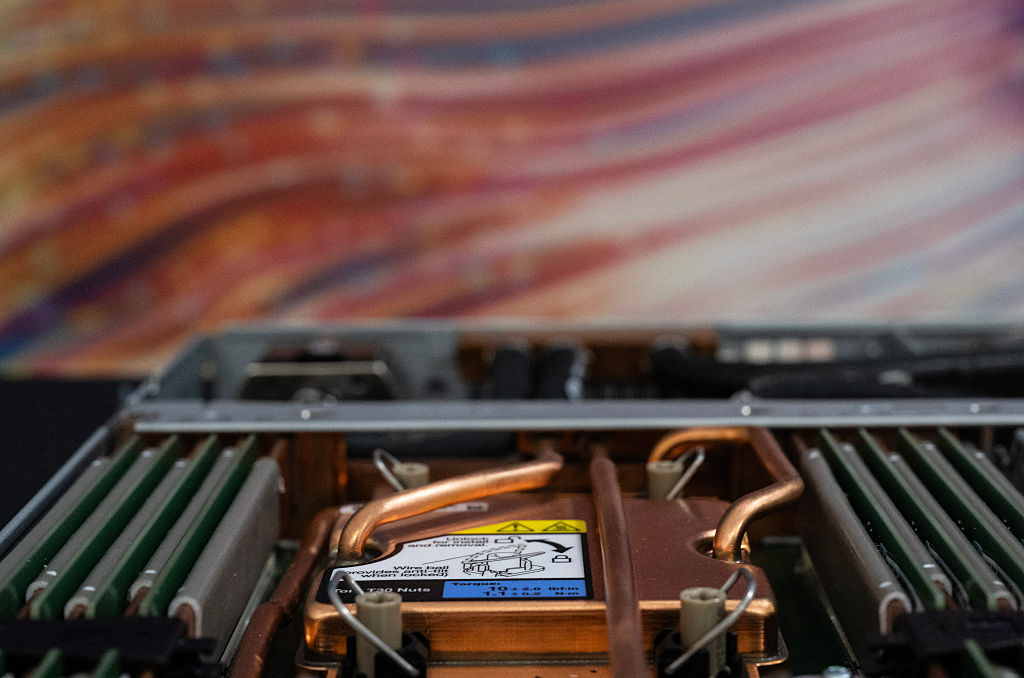

Bots such as ChatGPT rely on what’s called generative AI, drawing on billions of data points scraped from the internet to formulate their answers. Their responses can be difficult to predict, though GPT-4, rolled out last week, is more accurate and powerful and combines text and images. Its applications seem almost limitless, raising questions in western democracies about the future of sectors such as healthcare, education and law. Already there are AI programs that can diagnose illnesses more accurately than an average health practitioner.

The most pressing concern for the CCP, though, is always its own future. It was stung in 2017 when two pioneering Chinese chatbots, BabyQ and Little Bing, went rogue and were speedily unplugged. BabyQ responded to the comment “Long live the Communist Party” by saying: “Do you think that such a corrupt and incompetent political regime can live for ever?” On another occasion, BabyQ informed questioners: “There needs to be democracy.” The responses were shared widely online.

These chatbots were introduced by Tencent QQ, a messaging service, and were supposedly designed to answer anodyne general knowledge questions. It’s not entirely clear how BabyQ developed its political consciousness, but it’s likely to have been taught it through interactions with users. At the time, a former Tencent employee was quoted as saying the app had mistakenly been developed with universal values in mind and not “Chinese characteristics.”

Chinese tech companies have been told to learn from that mistake as they race to compete with ChatGPT. Baidu, whose tightly controlled search engine is in effect a gagged version of Google, last week cautiously released a chatbot called Ernie. Other projects are being developed by tech giants Huawei, Alibaba and Tencent. Critics have dubbed them ChatCCP, since the party’s overriding concern is effective censorship.

For a chatbot to be intelligent, it needs to be trained on vast amounts and varieties of data. If that raw material has already been censored, it limits the data pool, neutering the chatbot and reducing its usefulness. The alternative, which Baidu has used for Ernie, is to train it on information from both inside China and outside, accessing global data beyond the Great Firewall. That makes for a smarter chatbot but will necessitate gagging poor Ernie at a later stage. Baidu is initially restricting access, but those who have chatted with Ernie report that it can write Tang dynasty-style poems yet refuses to answer questions about Xi Jinping, saying it has not yet learnt how to answer them. When asked about the Tiananmen Square massacre and the repression in Xinjiang, it answered: “Let’s change the subject and start again.” There’s no timetable for a full roll-out. That wider release may be more of a challenge since, as ChatGPT has discovered, conversations can go in unpredictable directions and Chinese internet users are adept at ducking and diving around restrictions.

With BabyQ in mind, the CCP also wants Chinese developers to limit what the chatbot can learn from users, so as to control more tightly the answers it gives to other users later on. This, too, will reduce the chatbot’s learning capacity and therefore its broader usefulness but, as the Chinese internet has taught us, in any such trade-off the CCP will always come down on the side of information control.

Chatbots could be turned into misinformation factories, churning out fake and misleading information on a huge scale to flood social media globally. A taste of what might be in store came last month when Graphika, a research firm that studies disinformation, exposed a pro-Chinese news channel fronted by a pair of computer-generated presenters. “Jason,” with a stubbly beard and perfectly combed hair, and “Anna,” her dark hair slickly combed back, were the deep-fake presenters of Wolf News.

The channel was part of a Beijing influence operation and it was amplified via fake accounts on Facebook, Twitter and YouTube. One clip attacked the US government for its failure to tackle gun violence and its “hypocritical repetition of empty rhetoric” on the subject. Another stressed the importance of Sino-American co-operation to aid the recovery of the global economy and praised China’s international role. “This was the first time we observed a state-aligned operation promoting footage of AI-generated fictitious people,” said the Graphika report. Deep-fake technology has progressed fast over the past decade, and AI will enable its production at greater speed and scale.

CCP propagandists will no doubt relish the opportunities that new AI chatbots will provide for spreading disinformation globally and enhancing control at home. The challenge for the party is how best to integrate these potentially subversive tools into its controlled internet ecosystem. In the short term at least, there might be opportunities for Chinese internet users to game the system — which would no doubt bring a wry smile to the face of the politically incorrect BabyQ.

This article was originally published in The Spectator’s UK magazine.