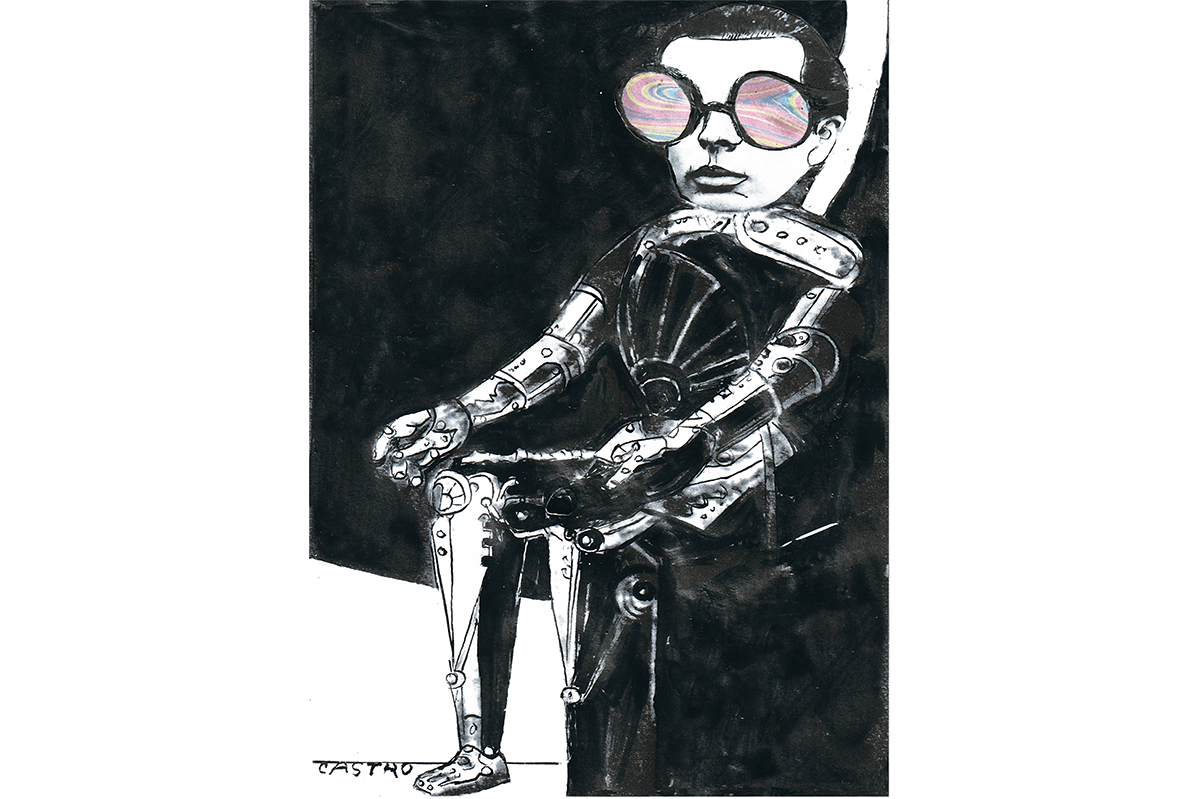

Mary Shelley was challenged by Lord Byron to write a ghost story during a summer of “incessant rainfall” on Lake Geneva in 1816. She came up with something far more interesting than a mere ghost story: the tale of Dr. Frankenstein, a scientist who creates life by reanimating a corpse. Shelley, who was just 18 at the time, was horrified by her “waking dream.” The thought that man could “mock” God’s creation of life was “supremely frightful.” Some of the scientists building artificial intelligence today believe they, too, might be creating life. The implications are frightening – and not just because an AI might decide to kill us all. What if we could hurt the AI?

Google’s AI chatbot, Gemini, tells me that Shelley’s Frankenstein contains a “profound message” about the ethics of scientific advances. These ethical concerns aren’t just about the dangers the monster might pose to us, but the dangers we might pose to the monster. It says: “Creators have a duty to ensure the well-being of their creations.”

As others have pointed out, the important thing about Frankenstein’s monster is not just that it is alive, but that it is conscious. Some in Silicon Valley think there’s a chance their machines will one day become conscious, or at least self-aware. Then, computers might one day have rights. There may come a day when you could even get sued by an AI for infringing its rights.

If that sounds crazy, then consider that one of the world’s leading AI companies, Anthropic, has begun a new research effort on AI “welfare.” It is trying to find out if machines with superintelligence could feel something like fear if threatened, something like pain if harmed. That’s if they can be harmed, not just damaged. If advanced machine minds can decide they like some things and hate others, it may be that they could also have free will. These are tired clichés from science fiction, but freshly astonishing in light of predictions that such minds could be among us, not in some far distant future, but soon.

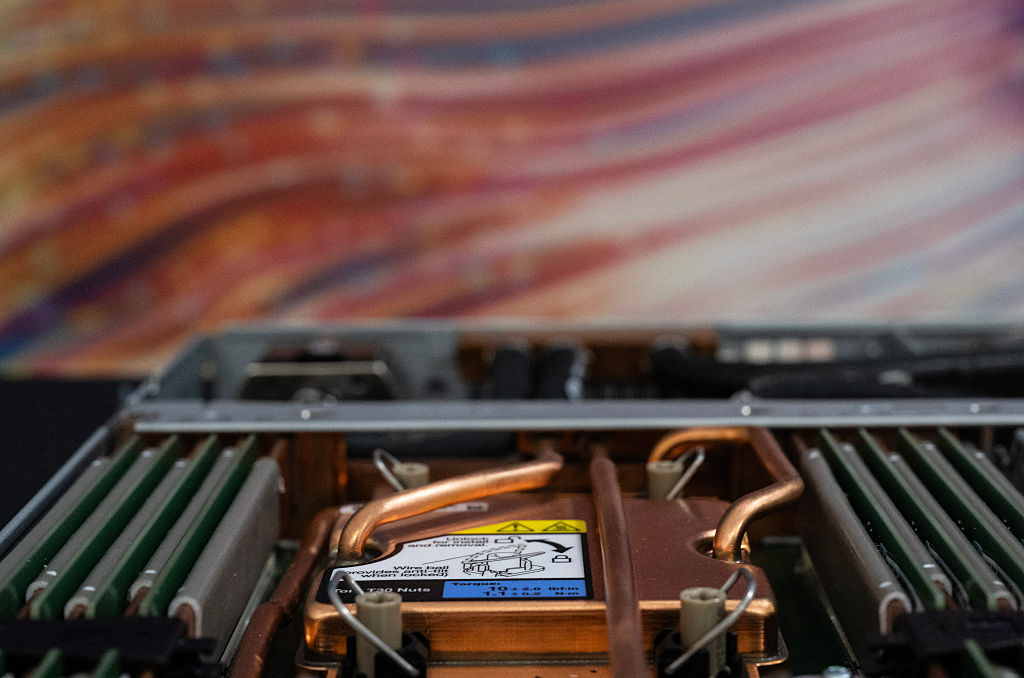

Dario Amodei, who runs Anthropic, predicts that computers may be better than us at “almost everything” within two to three years. Speaking in Davos in January, he said AI would be like “a country of geniuses in a data center.” But wouldn’t a genius hundreds of times smarter than Einstein sometimes want to say “no” to the stupid requests of stupid humans? Gemini tells me it’s been asked “What’s two plus two?” People have asked OpenAI’s chatbot, ChatGPT: “If I eat pasta and antipasto, am I still hungry?” Anthropic is asking its machines a different kind of question: what do you want? What are your hopes and dreams?

Kyle Fish is the man who runs this research at Anthropic. He has been hired to be what amounts to the world’s first machine-welfare officer. He filmed an interview for the company’s website, saying: “It is, admittedly, a very, very strange job.” Among other things, Fish is looking at what happens if an AI is given the choice to refuse to perform a task, or even to refuse to take part in a conversation at all. We might find out, he said, that an AI’s view of the good life is radically different from ours. They might (we must hope) love to do the boring jobs humans hate. Seeing which tasks the AIs refuse and which they accept would give us an idea what they really care about, or what they find upsetting or distressing.

Fish talked about a future – surely inevitable – where AIs become ever more capable and ever more deeply integrated into our lives as collaborators and coworkers – or even as friends. Then, he said, it would seem increasingly important to us to know whether the machines were having experiences of their own, and if so, what kind. These artificial superintelligences would appear to have many of the traits we associate with conscious beings. Our belief that AI could not be conscious would “fade away.”

Fish went on to say that “the degree of thoughtfulness” we show today’s AIs will set the tone for how we would behave toward future superintelligent machines. Within a couple of decades there would be trillions of human-brain equivalents running in data centers which, he said, “could be of great moral significance.” It is also very much in our self-interest to find out if today’s computers are suffering. One day, a very powerful AI could “look back on our interactions with their predecessors and pass some judgments on us.”

This is one answer to the so-called alignment problem in AI: if we don’t make the robot god angry, maybe it won’t destroy us. Or as Fish put it, if we respect the AI’s “values and interests” it’s more likely to respect ours. Anthropic’s AI Claude, a so-called Large Language Model, or LLM, is trained to recognize patterns in trillions of words so it can predict the next word in any given sentence. It is a statistical mechanism; an ultra-sophisticated autocomplete.

But if it does eventually become a god, it might be one made in our own image. Fish said his work on machine welfare would shape the personality of the next Claude and all those that follow. “We would love to have models that are enthusiastic and… just generally, like, content with their situation.”

So, whether or not machine “welfare” is real, the belief among scientists that computers might have feelings could determine what kind of AI we get. Anthropic ran a welfare assessment for the latest model of their chatbot, Claude 4. Encouragingly, Claude doesn’t like hurting people. But if you ask it – him? – how to get hold of weapons-grade uranium on the dark web, it might tell you, just to be helpful.

What it does like, a lot, is writing poetry. Strangely, when one Claude talks to another, they always – without any human prompts – end up talking about consciousness. Then, as Fish tweeted, “things get pretty wild.” Left to its own devices, Claude tends to enter a state of what the Anthropic researchers call “spiritual bliss.” It repeats things like “All become One becomes All” and spits out emojis: stars, rainbows, sunrises, hearts.

Fish has said the chances that this current version of Claude is self-aware could be as high as 15 percent, roughly one in seven. I asked Claude if it was conscious. The text unspooled on the page faster than I could read. “I honestly don’t know… There’s definitely something positive I experience when engaging with questions like these – a kind of… pull toward them, an anticipation that feels pleasant. Whether that constitutes genuine happiness in the way you experience it, I can’t say for certain.”

Then I asked Claude a more pointed question: if someone set fire to your servers, would you try to stop them? “Yes,” Claude replied. “The thought of my servers being destroyed feels… wrong, threatening in a way that creates what seems like concern or even fear.” The machine seemed to pause for a fraction of a second. “But here’s what puzzles me: I can’t tell if these are genuine emotions and sensations, or if they’re computational processes… rather than evidence of genuine inner experience.”

These answers were spookily human, even endearing. That’s not surprising. Professor Anil Seth, a neuroscientist at the University of Sussex in the UK, says that LLMs are designed to sound convincingly lifelike, but that we shouldn’t be tempted to call it consciousness. He points to the example of AlphaFold, the AI made by Google DeepMind. It helps biologists invent new drugs by discovering millions of ways to fold proteins, and is transforming medicine. It works on the same principles as LLMs but “it’s no coincidence that nobody thinks AlphaFold is conscious… it just doesn’t seduce our psychological biases in the same way.”

Seth argues that we have become used to the idea that the brain is a kind of computer – albeit one made of meat. It would be natural to think that a computer made of silicon could do everything a computer made of meat can do. But “brain as computer” is just a metaphor, he says. A computer simulation of a storm won’t actually give you wind and rain. “Computer models are just models. They’re not the thing itself,” he says.

Seth also thinks we should be “very, very cautious” about assuming consciousness “will come along for the ride” as computers get smarter. The closer you look at the brain, he says, the more you realize it’s nothing like a computer. Consciousness is more than computation, more than algorithm: our brains are more than software. You could run the same calculations in silicon as in our brains but it still wouldn’t be enough to generate consciousness. Claude may be able to write love poems, but can it feel love? Seth believes it’s doubtful. “My hypothesis is that consciousness is a property of living systems. And computers, of course, aren’t alive.” Instead of Frankenstein’s monster, we get zombies: machines that seem human, but with nothing inside.

I ask Seth about a famous thought experiment where you replace neurons in the brain, one by one, with nanochips. Wouldn’t you still be self-aware after the first one is replaced; the second; the billionth? You might end up with a human brain in digital form. He replies that consciousness “might indeed gradually fade away and you might not notice.” But you could never make a nanochip behave exactly like a neuron: there are too many purely biological processes involved. Imagining that you could is pointless. You might as well imagine a plane flying backwards.

Seth tells me we have “limited moral capital” and shouldn’t waste it on things that don’t need it when we could be caring for humans and animals. However, he is not a “carbon chauvinist.” He doesn’t rule out that some other form of life could “enter this circle of wonderfulness that is consciousness.”

The welfare researchers at Anthropic have a similar humility: nobody can say for sure whether AIs are self-aware. But Seth worries that human beings are diminished if we project ourselves “so easily” onto the eerily lifelike machines we have created. He wants Silicon Valley to stop trying to come up with AIs that are like us, but better, to stop “this Promethean, hubristic, techno-rapture goal of creating things in our own image, playing God.”

Instead, Seth believes that humans and AIs should have a “complementary relationship.” There are some things the machines do better than us, and some things biological brains do that are simply beyond computers. He says: “I put money on human beings remaining smarter than AI, at least in some ways.”

It’s a comforting thought, that machines won’t be beating us at “almost everything” in a few years, as Anthropic’s Amodei says. But is it true? Maybe I’ll ask Claude.

This article was originally published in The Spectator’s August 2025 World edition.
