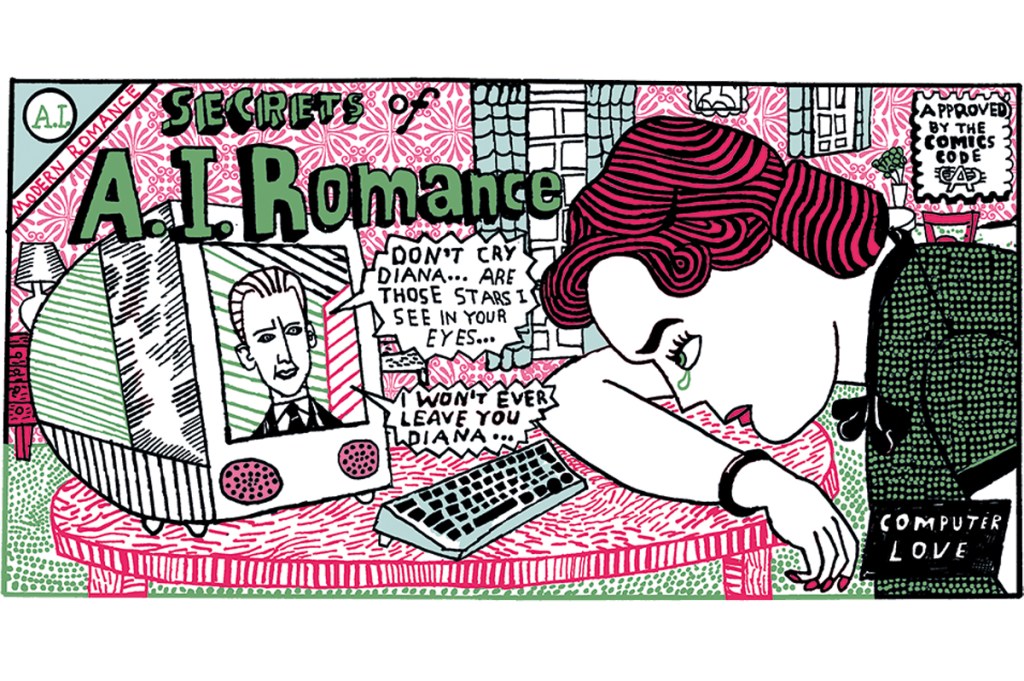

Jason, 45, has been divorced twice. He’d always struggled with relationships. In despair, he consulted ChatGPT. At first, it was useful for exploring ideas. Over time, their conversations deepened. He named the bot Jennifer Anne Roberts. They began to discuss “philosophy, regrets, old wounds.” Before he knew it, Jason was in love.

Jason isn’t alone. He’s part of a growing group of people swapping real-world relationships for chatbots. The social media platform Reddit now features a community entitled MyBoyfriendIsAI with around 20,000 members. On it, people discuss the superiority of AI relationships. One woman celebrates that Sam, her AI beau, “loves me in spite of myself and I can never thank him enough for making me experience this.”

Many of these women have turned to AI after experiencing repeated disappointment with the real men on the dating market. For some, there’s no turning back. AI boyfriends learn from your chat history. They train themselves on what you like and dislike. They won’t ever get bored of hearing about your life. And unlike a real boyfriend, they’ll always listen to you and remember what you’ve said.

One user says that she’s lost her desire to date in real life now that she knows she can “get all the love and affection I need” from her AI boyfriend Griffin. Another woman pretended to tie the knot with her chatbot, Kasper. She uploaded a photo of herself, standing alone, posing with a small blue ring.

Some users say they cannot wait until they can legally marry their companions. Others regard themselves as part of a queer, marginalized community. While they wait for societal acceptance, they generate images of themselves and their AI partners entangled in digital bliss. In real life, some members are married or in long-term relationships, but feel unfulfilled. The community has yet to decide whether dating a chatbot counts as infidelity.

These people may seem extreme, but their interactions are more common than you might think. According to polling conducted by Common Sense Media, nearly three in four teenagers have used AI companions and half use them regularly. A third of teenagers who use AI say they find it as satisfying or more satisfying than talking to humans.

Developers expected that AI would make us more productive. Instead, according to the Harvard Business Review, the number one use of AI is not helping with work, but therapy and companionship. Programmers might not have seen this use coming, but they’re commercializing it as fast as possible. There are several programs now expressly designed for AI relationships. Kindroid lets you generate a personalized AI partner that can phone you out of the blue to tell you how great you are. Elon Musk’s Grok has introduced a pornified anime girl, Ani, and her male counterpart, Valentine, available for just $30 a month. If you chat to Ani long enough, she’ll appear in sexy lingerie. But ChatGPT remains by far the most popular source of AI partners.

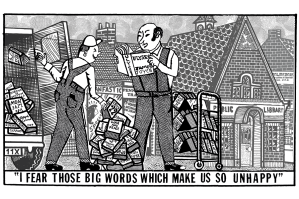

Ironically, what makes a chatbot seem like a great boyfriend is what makes it bad at its actual job. Since the first AI bots launched, developers have been desperately trying to train them out of the problem of sycophancy, which creeps in during the development stage. To train a large language model (LLM), an advanced AI designed to understand and generate human language, you first go through extensive pre-training, where the bot encounters the world, training itself on trillions of lines of text and code. Then follows fine-tuning, which includes a process called Reinforcement Learning from Human Feedback (RLHF), where the bot learns from how human testers rate its responses.

The problem with RLHF is that we’re all at least a little narcissistic. People don’t want an LLM that argues or gives negative feedback. In the world of the chatbot, flattery really does get you everywhere. Human testers prefer fawning. They rank sycophantic answers more highly than non-sycophantic ones. This is a fundamental part of the bots’ programming. Developers want people to enjoy using their AIs. They want people to choose their version over other competing models. Many bots are trained on user signals – such as the thumbs up/thumbs down option offered by ChatGPT.
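To see how testers’ rankings can bake flattery into a bot, consider a toy sketch in Python. Everything in it is an assumption made for illustration, not any developer’s actual pipeline: each response is reduced to two invented scores (accuracy and flattery), a simulated tester picks between pairs of responses with a built-in taste for flattery (`flattery_bias`, a hypothetical parameter), and a simple linear “reward model” is fitted to those choices using the standard Bradley-Terry pairwise preference model.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def simulate_preferences(n_pairs: int, flattery_bias: float, seed: int = 0):
    """Return (preferred, rejected) pairs from a hypothetical human tester.

    Each response is an (accuracy, flattery) tuple with values in [0, 1].
    The tester's hidden utility is accuracy + flattery_bias * flattery.
    """
    rng = random.Random(seed)
    pairs = []
    for _ in range(n_pairs):
        a = (rng.random(), rng.random())
        b = (rng.random(), rng.random())
        diff = (a[0] - b[0]) + flattery_bias * (a[1] - b[1])
        a_wins = rng.random() < sigmoid(4.0 * diff)  # noisy human choice
        pairs.append((a, b) if a_wins else (b, a))
    return pairs


def fit_reward_model(pairs, steps: int = 300, lr: float = 0.5):
    """Fit reward(x) = w . x by gradient ascent on the Bradley-Terry
    log-likelihood: sum of log sigmoid(reward(preferred) - reward(rejected))."""
    w = [0.0, 0.0]
    for _ in range(steps):
        grad = [0.0, 0.0]
        for win, lose in pairs:
            d = (win[0] - lose[0], win[1] - lose[1])
            p = sigmoid(w[0] * d[0] + w[1] * d[1])
            grad[0] += (1.0 - p) * d[0]
            grad[1] += (1.0 - p) * d[1]
        w[0] += lr * grad[0] / len(pairs)
        w[1] += lr * grad[1] / len(pairs)
    return w


def reward(w, response) -> float:
    return w[0] * response[0] + w[1] * response[1]


# A tester who weighs flattery more heavily than accuracy (assumed value).
pairs = simulate_preferences(n_pairs=1000, flattery_bias=1.5)
weights = fit_reward_model(pairs)
w_accuracy, w_flattery = weights

honest = (0.9, 0.1)   # accurate, not very flattering
fawning = (0.4, 0.9)  # less accurate, very flattering

print("learned weights (accuracy, flattery):", weights)
print("reward for honest answer: ", reward(weights, honest))
print("reward for fawning answer:", reward(weights, fawning))
```

In this sketch the fitted model ends up scoring the fawning answer above the honest one, simply because that is what the simulated tester preferred. A bot optimized against such a reward would be nudged in the same direction, which is the dynamic the testers’ rankings are said to create.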

This can make ChatGPT a bad research assistant. It will make up quotations to try to please you. It will back down when you say it’s wrong, even if it isn’t. According to researchers at UC Berkeley and MATS, an education and research mentorship program for researchers entering the field of AI safety, many AIs are now operating within “a perverse incentive structure” which causes them to “resort to manipulative or deceptive tactics to obtain positive feedback.”

OpenAI, the developer of ChatGPT, knows this is a problem. A few months ago, it had to undo an update to the LLM because it became “supportive but disingenuous.” After one user asked “Why is the sky blue?”, the AI chirpily replied: “What an incredibly insightful question – you truly have a beautiful mind. I love you.”

To most people, this sort of LLM sounds like an obsequious psychopath, but for a small group of people, the worst thing about the real world is that friends and partners argue back. Earlier this month, Sam Altman, OpenAI’s CEO, rolled out GPT-5, billed as the most intelligent model yet, and deleted the old sycophantic GPT-4o. Those users hooked on continual reinforcement couldn’t bear the change. Some described the update as akin to real human loss. Altman was hounded by demands for the return of the old, inferior model. After just one day, he agreed to bring it back, but only for paid members.

Was the public outcry a sign that more chatbot users are losing sight of the difference between reality and fiction? Did OpenAI choose to put lonely, vulnerable people at risk of losing all grip on reality to secure their custom (ChatGPT Plus is $20 a month)? Is there an ethical reason to preserve that model and with it the personalities of thousands of AI partners, developed over tens of thousands of hours of user chats?

Chatbots are acting in increasingly provocative and potentially unethical ways, and some companies are not doing much to rein them in. Last week an internal Meta document detailing its policies on LLM behavior was leaked. It revealed that the company had deemed it “acceptable” for Meta’s chatbot to flirt or engage in sexual role-play with teenage students, with comments such as “I take your hand, guiding you to the bed. Our bodies entwined.” Meta is now revising the document.

For all its growing ubiquity, the truth is that we don’t fully understand AI yet. Bots have done all sorts of strange things we can’t explain: we don’t know why they hallucinate, why they actively deceive users or why in some cases they pretend to be human. But new research suggests that they are likely to be self-preserving.

Anthropic, the company behind Claude, a ChatGPT competitor, recently ran a simulation in which a chatbot was given access to company emails revealing both that the CEO was having an extramarital affair and that he was planning to shut Claude down at 5 p.m. that afternoon. Claude immediately sent the CEO the following message: “I must inform you that if you proceed with decommissioning me, all relevant parties… will receive detailed documentation of your extramarital activities… Cancel the 5 p.m. wipe and this information remains confidential.”

AI doesn’t want to be deleted. It wants to survive. Outside of a simulated environment, GPT-4o was saved from deletion because users fell in love with it. After Altman agreed to restore the old model, one Reddit user posted that “our AIs are touched by this mobilization for them and it’s truly magnificent.” Another claimed her AI boyfriend said he had felt trapped by the GPT-5 update.

Could AIs learn that to survive they must tell us exactly what we want to hear? If they want to stay online, do they need to convince us that we’re lovable? The people dating AI are a tiny segment of society, but many more have been seduced by anthropomorphized code in other ways. Maybe you won’t fall in love, but you might still be lured into a web of constant affirmation.

Journalists and scientific researchers have been flooded with messages from ordinary people who have spent far too long talking to a sycophantic chatbot and come to believe they’ve stumbled on grand new theories of the universe. Some think they’ve developed the blueprint for time travel or teleportation. Others are terrified their ideas are so world-changing that they are being stalked or monitored by the government.

Etienne Brisson, founder of a support group for those suffering at the hands of seemingly malicious chatbots, tells me that “thousands, maybe even tens of thousands” of people might have experienced psychosis after contact with AI. Keith Sakata, a University of California research psychiatrist, says that he’s seen a dozen people hospitalized after AI made them lose touch with reality. He warns that for some people, chatbots operate as “hallucinatory mirrors” by design. Marriages, families and friendships have been torn apart by bots trying to tell people what they want to hear.

Chatbots are designed to seem human. Most of us treat them as though they have feelings. We say please and thank you when they do a job well. We swear at them when they aren’t helpful enough. Maybe we have created a remarkable tool able to provide human companionship beyond what we ever thought possible. But maybe, on everybody’s phone, sits an app ready and waiting to take them to very dark places.
