So now it’s official: Chinese chatbots will have to be “socialist”, and woe betide any tech company that allows its AI creation to have a mind of its own.

While the Communist Party wants to lead the world in AI, it is terrified of anything with a mind of its own

“Content generated by generative artificial intelligence should embody core socialist values and must not contain any content that subverts state power, advocates the overthrow of the socialist system, incites splitting the country or undermines national unity,” according to draft measures published Tuesday by China’s powerful internet regulator, the Cyberspace Administration of China, or CAC.

To this end, tech companies will have to send their chatbots to the CAC for security reviews before they are released to the public. In addition, the platforms providing the services will have to track their users and verify their identities — presumably to quickly spot miscreants posing the sort of tricky questions that might provoke a non-socialist answer.

China has already barred ChatGPT, the Microsoft-funded chatbot that can provide seemingly well-researched answers to pretty much any question you can think to ask it. But China’s tech giants have been racing to develop their own versions. Alibaba on Tuesday showed off Tongyi Qianwen, which translates as “truth from a thousand questions” (no irony meant as far as we know). It will be incorporated in company products ranging from mapping to e-commerce “in the near future.” The group’s CEO Daniel Zhang demonstrated how it would allow users to transcribe meeting notes, craft business pitches and tell children’s stories. “We are at a technological watershed moment,” he said.

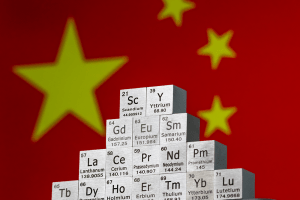

Baidu (a search engine), SenseTime, Tencent and Huawei are among other Chinese tech companies with big chatbot ambitions. The conundrum they all face, and which was spelt out starkly by the CAC today, is that while the Communist Party wants to lead the world in AI, it is terrified of anything with a mind of its own. For a chatbot to be intelligent, and to maximize its economic usefulness, it needs to be trained on vast amounts and varieties of data. Generative AI typically draws on billions of data points scraped from the internet to formulate answers. If that raw material has been censored, it limits the data pool, somewhat neutering the chatbot. The alternative, which Baidu used for its Ernie chatbot, is to train it on information from both inside China and outside, accessing global data beyond the Great Firewall. That makes for a smarter chatbot but necessitates gagging poor Ernie at a later stage.

As ChatGPT has discovered, conversations can go in unpredictable directions, and Chinese internet users are adept at ducking and diving around restrictions. The Communist Party’s overriding priority is censorship. As the tightly controlled Chinese internet has taught us, in any trade-off the CCP will always come down on the side of information control. The priority for the CAC is how best to integrate these potentially subversive tools into its ecosystem of censorship, surveillance and disinformation.

This is why the rollouts have so far been very limited. Those who have gained access to Baidu’s Ernie report that it can write Tang dynasty-style poems but refuses to answer questions about Xi Jinping, saying it has not yet learnt how to answer them. When asked about the Tiananmen Square massacre and the repression in Xinjiang, it answered: “Let’s change the subject and start again.”

Which will no doubt be encouraging for China’s communist leaders. Less so, some of Ernie’s more basic errors. The chatbot attracted online ridicule after it drew a turkey (the bird) when asked for the country Turkey, and a crane (the bird) when asked for the construction machine. No one yet seems to have asked about Winnie the Pooh, the online image frequently used to depict Xi Jinping. China’s cyber administration will no doubt be on the lookout for such acts of gross subversion as it tries to restrain Chinese chatbots without making a complete turkey of itself.

This article was originally published on The Spectator’s UK website.